Updated: 2021-08-05 16:52:56  Source: 动力节点

Cookies are very useful in web crawling: they can help with problems such as anti-scraping checks and account bans. Below are several ways to obtain cookies.

The examples use Python 2.7. I normally use Python 3.6, but my company standardizes on 2.7, so 2.7 it is. The two versions differ mainly in syntax and module names.
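Most readers are now probably on Python 3. For the code below, the main difference is the module names: `cookielib` became `http.cookiejar`, and `urllib2`'s opener functions moved to `urllib.request`. Here is a minimal sketch for a script that should import the right modules on either version. The aliases `cookielib` and `urllib_request` are simply names chosen for this example.

```python
# Import the cookie and opener modules under one name on Python 2.7 and 3.
try:
    import cookielib                      # Python 2.7
    import urllib2 as urllib_request
except ImportError:
    import http.cookiejar as cookielib    # Python 3
    import urllib.request as urllib_request

# From here on, the code is identical on both versions
jar = cookielib.CookieJar()
opener = urllib_request.build_opener(urllib_request.HTTPCookieProcessor(jar))
```

After this, `opener.open(url)` fills `jar` the same way the urllib2 example in Method 2 does.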

Method 1: mechanize

Step 1: install mechanize.

```shell
pip install mechanize
```

Step 2: write the code that fetches the cookies:

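```python
import cookielib

import mechanize

br = mechanize.Browser()

# Attach a cookie jar; the browser stores every cookie the server sets in it
cj = cookielib.LWPCookieJar()
br.set_cookiejar(cj)

# Browser behaviour
br.set_handle_equiv(True)      # treat <meta http-equiv> tags as HTTP headers
br.set_handle_gzip(True)       # accept gzip-compressed responses
br.set_handle_redirect(True)   # follow HTTP redirects
br.set_handle_referer(True)    # send a Referer header
br.set_handle_robots(False)    # do not obey robots.txt
# Follow <meta refresh> redirects, but only those with a delay of at most 1 second
br.set_handle_refresh(mechanize._http.HTTPRefreshProcessor(), max_time=1)
br.set_debug_http(True)        # print the HTTP traffic for debugging

br.addheaders = [('User-agent', 'your User-Agent string')]
br.set_proxies({"http": "host:port"})  # replace with your proxy, or remove this line

response = br.open('https://www.amazon.com')

# cj is the same jar passed to set_cookiejar(), so it now holds the cookies
for cookie in cj:
    print("cookieName:%s" % cookie.name)
    print("cookieValue:%s" % cookie.value)

# Collect the cookies into a {name: value} dict; unlike splitting
# "name:value" strings on ":", this does not break on values containing ":"
cookiestr = dict((cookie.name, cookie.value) for cookie in cj)
```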

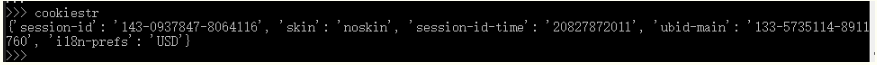

運(yùn)行結(jié)果:
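The mechanize example uses `LWPCookieJar` instead of a plain `CookieJar`. One practical reason to do that is that `LWPCookieJar` can save cookies to a file and load them back, so cookies collected once can be reused in a later run. The sketch below uses Python 3's `http.cookiejar`, which provides the same classes as `cookielib` on 2.7. A hand-built cookie stands in for the ones mechanize would collect; `make_cookie`, the cookie name and value, and `example.com` are all hypothetical.

```python
import os
import tempfile
import time
from http.cookiejar import Cookie, LWPCookieJar  # cookielib on Python 2.7


def make_cookie(name, value, domain):
    """Hypothetical helper: build a persistent cookie valid for one hour."""
    return Cookie(
        version=0, name=name, value=value,
        port=None, port_specified=False,
        domain=domain, domain_specified=True,
        domain_initial_dot=domain.startswith("."),
        path="/", path_specified=True,
        secure=False, expires=int(time.time()) + 3600, discard=False,
        comment=None, comment_url=None, rest={},
    )


jar = LWPCookieJar()
jar.set_cookie(make_cookie("session-id", "123-4567890", ".example.com"))

# Save in the libwww-perl "Set-Cookie3" text format
path = os.path.join(tempfile.mkdtemp(), "cookies.lwp")
jar.save(path)

# Later (e.g. in the next run): load the file into a fresh jar
restored = LWPCookieJar()
restored.load(path)
cookies = {c.name: c.value for c in restored}
print(cookies)
```

With the real jar from mechanize, you would call something like `cj.save("cookies.lwp", ignore_discard=True)`. Session cookies are marked as discardable, and `save()` skips them unless `ignore_discard=True` is passed.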

Method 2: urllib (urllib2 on Python 2.7)

```python
import cookielib
import urllib2

User_Agent = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36'
header = {'User-Agent': User_Agent}

# The CookieJar collects every cookie the server sets
cookie = cookielib.CookieJar()
cookie_handle = urllib2.HTTPCookieProcessor(cookie)

cookie_opener = urllib2.build_opener(cookie_handle)
# To use a proxy, build ONE opener with both handlers instead; installing a
# second opener would replace this one and lose the cookie handling.
# (Add an "https" entry as well, since the URL below is HTTPS.)
# proxy_support = urllib2.ProxyHandler({"http": "5.62.157.47:8085"})
# cookie_opener = urllib2.build_opener(cookie_handle, proxy_support)
urllib2.install_opener(cookie_opener)

request = urllib2.Request("https://www.amazon.com", headers=header)
response = urllib2.urlopen(request)

for item in cookie:
    print('Name = %s' % item.name)
    print('Value = %s' % item.value)
```

Output:
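Below is the same `CookieJar` + `HTTPCookieProcessor` pattern in Python 3 (`urllib2` → `urllib.request`, `cookielib` → `http.cookiejar`). It runs against a throwaway local server instead of Amazon so that it works offline. The `/login` and `/whoami` paths and the `session=abc123` cookie are made up for this demo. The sketch shows what the cookie processor buys you: a cookie set by the first response is sent back automatically on the next request made through the same opener.

```python
import http.cookiejar
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class CookieHandler(BaseHTTPRequestHandler):
    """Toy server: /login sets a cookie, /whoami echoes the Cookie header."""

    def do_GET(self):
        self.send_response(200)
        if self.path == "/login":
            self.send_header("Set-Cookie", "session=abc123; Path=/")
        self.end_headers()
        body = self.headers.get("Cookie", "") if self.path == "/whoami" else "ok"
        self.wfile.write(body.encode())

    def log_message(self, *args):
        pass  # keep the console quiet


server = HTTPServer(("127.0.0.1", 0), CookieHandler)  # port 0: pick a free port
threading.Thread(target=server.serve_forever, daemon=True).start()
base = "http://127.0.0.1:%d" % server.server_port

jar = http.cookiejar.CookieJar()
# ProxyHandler({}) ignores any *_proxy environment variables,
# so the requests go straight to the local server
opener = urllib.request.build_opener(
    urllib.request.HTTPCookieProcessor(jar), urllib.request.ProxyHandler({}))

opener.open(base + "/login").read()                     # server sets the cookie
echoed = opener.open(base + "/whoami").read().decode()  # jar sends it back

server.shutdown()
server.server_close()
print([c.name + "=" + c.value for c in jar])
print(echoed)
```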

Method 3: requests

```python
import requests

headers = {'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36'}

r = requests.get('https://www.amazon.com', headers=headers)

# r.cookies is a cookie jar holding the cookies the server sent back
for cookie in r.cookies:
    print(cookie.name)
    print(cookie.value)
    print("=========")
```

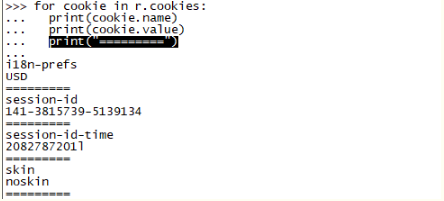

運(yùn)行結(jié)果:

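Method 4: selenium (in my experience it loads pages slowly, but it gets the most complete set of cookies, likely because it drives a real browser that also runs the page's JavaScript)

```shell
pip install selenium
```

Code: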

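```python
from selenium import webdriver

# Selenium 3 style, which is what runs on Python 2.7;
# Selenium 4 takes a Service object instead of executable_path
driver = webdriver.Chrome(executable_path='d:/seop/chromedriver.exe')
driver.get("https://www.amazon.com")

# To reuse cookies obtained earlier (e.g. the cookiestr dict from the
# mechanize example), add them and reload the page:
# for c in cookiestr.keys():
#     driver.add_cookie({'name': c, 'value': cookiestr[c]})
# driver.get("https://www.amazon.com")

# get_cookies() returns a list of dicts with "name", "value", "domain", ...
cookie = [item["name"] + "=" + item["value"] for item in driver.get_cookies()]
# Join into a Cookie-header style string: "name1=value1; name2=value2"
cookiestr = '; '.join(cookie)

driver.quit()
```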

Output:
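The Selenium example ends with a single `name=value; name=value` string. That format suits a raw `Cookie` header, but `requests` expects a dict for its `cookies=` argument. Below is a small sketch for converting back, assuming Python 3's `http.cookies.SimpleCookie` (the `Cookie` module on 2.7). The helper `cookie_string_to_dict` and the sample cookie names are hypothetical.

```python
from http.cookies import SimpleCookie  # Cookie.SimpleCookie on Python 2.7


def cookie_string_to_dict(cookiestr):
    """Hypothetical helper: parse 'name1=value1; name2=value2' into a dict."""
    parsed = SimpleCookie()
    parsed.load(cookiestr)
    return {name: morsel.value for name, morsel in parsed.items()}


cookies = cookie_string_to_dict("session-id=123-4567890; i18n-prefs=USD")
print(cookies)
```

You can then pass the resulting dict as `requests.get(url, cookies=cookies)`. Note that `SimpleCookie` treats names such as `path` or `expires` as cookie attributes, so this approach only suits ordinary name/value pairs.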

That covers several ways of getting cookies, as introduced by the 动力节点 editors. I hope it helps; to learn more, see the article on how cookies work.
